Yanling Zuo (Minitab Statistical Software LLC) led the third of ten tutorials scheduled as part of the NISS Essential Data Science for Business series. Her tutorial session was titled “Decision Trees, Gradient Boosted Decision Trees, and Random Forests: Powerful Predictive Analytics Tools Complementary to Regression.” This area is one of the top 10 analytics topics used in business today! (Review the Overview Presentation about all 10 Sessions).

Yanling began her session with a quick overview of predictive analytics, which covers a wide variety of techniques, including machine learning, neural networks, text analysis, deep learning and artificial intelligence. For this session, Yanling highlighted the limitations of the top two methods, multiple linear regression and logistic regression, and used these limitations to introduce classification and regression trees (CART), gradient boosting and random forests as techniques complementary to those two regression methods.

The Topics

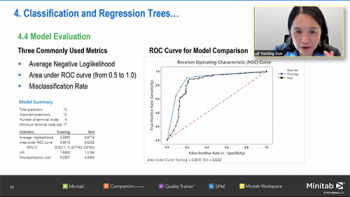

Yanling first provided a very thorough review of classification and regression trees. “Classification trees use a binary recursive partition algorithm to accomplish the goal. It starts splitting the mixed class group (parent node) into two purer groups (child nodes) by picking the best splitter.” Building on this basic principle, she walked through an example using heart data and the Predictive Analytics methods in the Minitab software to demonstrate how the splitting takes place and how it leads to an optimal tree, including an evaluation of the resulting model. Reviewing the pros and cons of the classification tree approach provided a natural transition to an approach that works to improve results.
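The idea of "picking the best splitter" can be sketched in a few lines of Python. This is a minimal illustration, assuming Gini impurity as the purity measure and a single numeric predictor; the function names and the toy age data are hypothetical and do not come from the tutorial or from Minitab.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure node, larger for a more mixed node."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Return (threshold, weighted child impurity) for the best binary split.

    Candidate thresholds are midpoints between adjacent distinct values.
    If no split lowers the impurity, the threshold is None.
    """
    n = len(labels)
    best = (None, gini(labels))  # start from the unsplit parent node
    distinct = sorted(set(values))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (t, score)
    return best


# Hypothetical toy data (not the heart dataset used in the session).
ages = [29, 35, 41, 48, 52, 57, 63, 68]
disease = ["no", "no", "no", "no", "yes", "yes", "yes", "yes"]

threshold, impurity = best_split(ages, disease)
print(threshold, impurity)
```

A full tree would apply `best_split` recursively to each child node, across all predictors, until a stopping rule is met.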

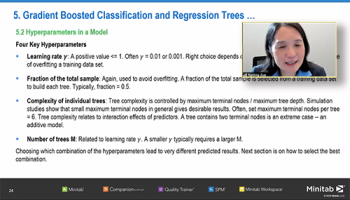

Yanling’s next step was to begin a discussion of gradient boosted regression trees. The idea here is to improve on the model fit of a single decision tree by building a sequence of regression trees, where the response values for each tree are the residuals from the previous trees. The reasoning behind this approach is that “the model slowly improves the fitted values in areas where they do not perform well.” Once again, Yanling’s thoroughness was evident as she worked her way through how this approach works and why improvements are possible. Yanling then switched over to the Minitab TreeNet Classification methods to demonstrate how this is applied to the heart dataset.
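The residual-fitting idea can be sketched in a few lines of Python. This is a minimal illustration, assuming squared-error loss, one-split trees (stumps), a single predictor and a hypothetical learning rate of 0.1. None of these choices, nor the toy data, come from the tutorial, and this is not how Minitab TreeNet is implemented.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression tree that minimizes squared error."""
    best = None
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean


def boost(xs, ys, n_trees=50, learning_rate=0.1):
    """Build a sequence of stumps, each fitted to the current residuals."""
    base = sum(ys) / len(ys)  # initial fit: the mean response
    preds = [base] * len(ys)
    trees = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        # Take a small step toward the residuals so the fit improves slowly.
        preds = [p + learning_rate * tree(x) for x, p in zip(xs, preds)]
    return lambda x: base + learning_rate * sum(tree(x) for tree in trees)


def mse(predict, xs, ys):
    """Mean squared error of a prediction function on the given data."""
    return sum((y - predict(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Hypothetical toy data with a jump between x = 4 and x = 5.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.2, 1.9, 3.1, 3.9, 8.2, 9.1, 9.8, 11.0]

mean_y = sum(ys) / len(ys)
baseline_mse = mse(lambda x: mean_y, xs, ys)
boosted_mse = mse(boost(xs, ys), xs, ys)
print(baseline_mse, boosted_mse)
```

Each new stump targets whatever the earlier trees still get wrong, which is why the training error of the boosted model falls below that of the mean-only baseline.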

Finally, Yanling explained the use of random forests as another way to try to overcome the randomness of a single decision tree. She demonstrated how random forests typically contain extremely large trees that are built on bootstrap samples. For a continuous response, the predicted value is the average of the predicted values across the individual trees. For a categorical response, the predicted value is the class that receives the most votes from the individual trees. Yanling also discussed how researchers might choose between a gradient boosted approach and a random forest approach.
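The bootstrap and aggregation steps described above can be sketched as follows. This is only a partial illustration, not a full random forest: it leaves out the tree-growing step entirely, and the function names, seed and per-tree predictions are hypothetical.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement; some rows repeat, some are left out."""
    return [rng.choice(rows) for _ in rows]


def aggregate_regression(tree_predictions):
    """Continuous response: average the predictions of the individual trees."""
    return mean(tree_predictions)


def aggregate_classification(tree_predictions):
    """Categorical response: return the class with the most votes.

    Ties go to the class that appears first in the list of predictions.
    """
    return Counter(tree_predictions).most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
rows = list(range(10))  # stand-in for ten training rows
sample = bootstrap_sample(rows, rng)

# Hypothetical predictions from five trees for one new observation.
forest_mean = aggregate_regression([2.0, 3.0, 4.0, 3.0, 3.0])
forest_vote = aggregate_classification(["yes", "no", "yes", "yes", "no"])
print(sample, forest_mean, forest_vote)
```

In an actual forest, each tree would be grown on its own bootstrap sample, and the lists passed to the aggregation functions would be those trees' predictions.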

Access to Materials

Once again, this NISS tutorial session was packed with detail, both on each of the methods discussed and on the examples implemented in the software. If you were not able to attend the live session, you can still access a recording of it along with links to the slides that Yanling used. Use the Registration Option "Post Session Access" on the event webpage, pay the $35 fee, and NISS will provide you with access to the materials for this session. Or register for the full series of ten tutorials, and NISS will provide all the links as well.

What’s Up Next?

The next NISS Essential Data Science for Business tutorial is scheduled to take place on Wednesday, November 18, 2020. Victor Lo (Fidelity Investments) along with Dominique Haughton (Bentley University) and Jonathan Haughton (Suffolk University) will team up as instructors for the next topic, “Causal Inference and Uplift Modeling.” Register today!

Further schedule dates/topics include:

December 2, 2020 - Ming Li: "Deep Learning"

See the Data Science Event Series here for the following upcoming topics:

- "Prescriptive Analytics and Optimization"

- "Unstructured Data Analysis"

- "Social Sciences and Data Science Ethics"

- "Domain Knowledge and Case Studies"

- "Analytical Consulting, Communication and Soft Skills"