Are you exploring data science applications? Are you looking to expand and update your foundational understanding of data science? Are you trying to deal with unstructured data such as text and images? If the answer is yes to any one of these questions, then this tutorial session is for you! Deep learning has certainly gained traction in the last few years, and the range of application areas only seems to be expanding, especially when non-traditional data sources are involved. Deep learning methods have now become essential tools in a data scientist’s toolbox.

On Tuesday, December 2nd, Ming Li (Senior Research Scientist at Amazon and adjunct faculty at the University of Washington) led a three-hour workshop filled with explanation, examples, and hands-on application.

The Topics

Ming Li began with an overview of how the professional areas of statistics, statistical engineering, and data science have evolved over the years. As part of this overview, Ming showed how the tools have grown as well, especially neural networks. He gave detailed explanations of how deep learning works, using a feed-forward neural network (FFNN) for traditional structured datasets, a convolutional neural network (CNN) for image-related applications, and a recurrent neural network (RNN) for text-related applications. Through these examples, Ming established that working neural network models contain many layers (i.e., the network is deep), and that to achieve near-human-level accuracy a deep neural network needs huge training datasets (for example, millions of labeled pictures for an image classification problem) and enormous computational power.
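To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass through a small feed-forward network, written in plain Python. It is not taken from the workshop materials: the layer sizes, the random weights, and the helper names (`dense`, `relu`, `softmax`, `random_layer`, `forward`) are all assumptions chosen for illustration, and no training is performed.

```python
import math
import random


def relu(values):
    # Rectified linear unit: negative activations are set to zero.
    return [max(0.0, v) for v in values]


def softmax(values):
    # Subtract the maximum before exponentiating for numerical stability.
    largest = max(values)
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    # One fully connected layer: each row of `weights` feeds one output unit.
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def random_layer(n_in, n_out, rng):
    # Hypothetical initialisation: small uniform weights, zero biases.
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(inputs, layers):
    # Hidden layers use ReLU; the final layer uses softmax to give class probabilities.
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))


rng = random.Random(0)
layer_sizes = [4, 8, 8, 3]  # 4 input features, two hidden layers, 3 classes (assumed)
layers = [random_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]
probabilities = forward([0.2, -1.0, 0.5, 0.7], layers)
print(probabilities)
```

Adding more entries to `layer_sizes` makes the network deeper. In practice the weights would be learned from labeled data rather than drawn at random, which is where the large datasets and computational power come in.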

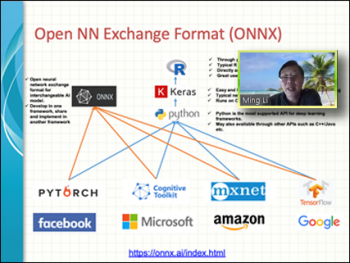

Ming commented on the different software tools that support this type of modeling. He described the capabilities of the Keras package, which builds upon and extends the capabilities of R, as well as the role of tools such as Python, TensorFlow, PyTorch, and others. He also described the Open Neural Network Exchange (ONNX), which aims to provide a common format for interchangeable AI models.

Ming used three detailed hands-on examples to demonstrate these methods. In the first hands-on section, Ming walked through a feed-forward neural network that classified images of handwritten numerals. The second hands-on example extended this modeling of the handwritten numeral data as Ming created and trained a convolutional neural network in Keras. In the final hands-on session, Ming used a recurrent neural network to analyze movie review text and identify positive reviews.
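For readers new to convolutional networks, the sketch below shows the basic operation a convolutional layer applies to an image: sliding a small kernel over the pixels and summing the element-wise products at each position. This is a plain-Python illustration under stated assumptions, not the Keras code used in the session. The function name `conv2d_valid`, the toy 4x4 image, and the edge-detecting kernel are all hypothetical.

```python
def conv2d_valid(image, kernel):
    # Slide the kernel over every position where it fits entirely inside the
    # image ("valid" mode) and sum the element-wise products at each position.
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is nonzero only in its middle column, which is where the vertical edge sits in the image. A trained CNN learns the values of many such kernels from data instead of having them chosen by hand.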

Access to Materials

Once again, the Essential Data Science tutorials provide so many details! Models, methods, software, and examples! If you were not able to attend this live session, you can still access a recording of the session along with links to the slides used during the session. Use the Registration Option "Post Session Access" on the event webpage, pay the $35 fee, and NISS will provide you with access to all the materials for this session. Or register for the full series of ten tutorials, and NISS will provide all the links as well.

What’s Up Next?

Here are the topics of the final five tutorial sessions, which will be presented beginning in January 2021. NISS is in the process of confirming details for the following sessions:

- "Prescriptive Analytics and Optimization"

- "Unstructured Data Analysis"

- "Social Sciences and Data Science Ethics"

- "Domain Knowledge and Case Studies"

- "Analytical Consulting, Communication and Soft Skills"

Information for registering and attending these sessions will be posted on the NISS website. Coming soon!