Overview

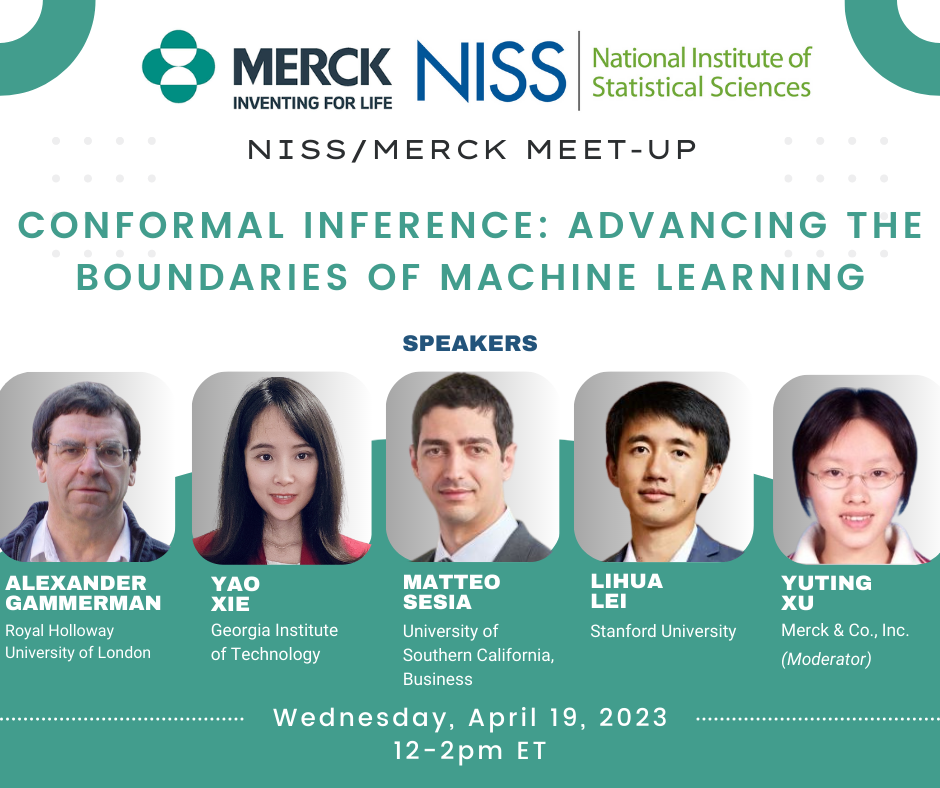

NISS/Merck Meetup on Conformal Inference: Advancing the Boundaries of Machine Learning, held from 12:00 PM to 2:00 PM EST on April 19, 2023.

Conformal inference is a statistical framework for attaching prediction intervals, with associated confidence levels, to the predictions of machine learning models. Rather than reporting only a point estimate, it constructs an interval for each individual data point, and the confidence level of that interval lets users assess the uncertainty of a prediction and make decisions accordingly. Notably, the intervals are produced without assumptions about the prediction algorithm or the data distribution, which makes conformal inference a flexible and powerful tool in machine learning. Conformal inference applies to both classification and regression and is an active area of research in statistics and computer science. The framework has the potential to advance the boundaries of machine learning by enabling more trustworthy predictions in a variety of fields, including finance, medicine, and environmental science.
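As a rough illustration of the idea described above, the following is a minimal sketch of split conformal prediction for regression, one common variant of the framework and not necessarily the method any speaker will present. It assumes a model that has already been fitted (the `predict` function here is a hypothetical stand-in), a held-out calibration set, and exchangeable data; the function names and the synthetic data are illustrative only.

```python
import math
import random


def conformal_quantile(scores, alpha):
    """Return the ceil((n + 1) * (1 - alpha))-th smallest calibration score."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: the interval is unbounded.
        return math.inf
    return sorted(scores)[k - 1]


def split_conformal_interval(predict, calib_x, calib_y, x_new, alpha=0.1):
    """Interval around predict(x_new) targeting coverage of at least 1 - alpha."""
    # Nonconformity score: absolute residual on the calibration set.
    scores = [abs(y - predict(x)) for x, y in zip(calib_x, calib_y)]
    q = conformal_quantile(scores, alpha)
    center = predict(x_new)
    return center - q, center + q


# Synthetic example (assumption: y = 2x + Gaussian noise).
rng = random.Random(0)
predict = lambda x: 2.0 * x  # hypothetical pre-fitted model
calib_x = [rng.uniform(0, 1) for _ in range(500)]
calib_y = [2.0 * x + rng.gauss(0, 0.5) for x in calib_x]

lo, hi = split_conformal_interval(predict, calib_x, calib_y, 0.3, alpha=0.1)
print(f"90% prediction interval at x = 0.3: [{lo:.2f}, {hi:.2f}]")
```

The model is treated as a black box: only its residuals on the calibration set are used, which is why the same wrapper can be placed around any prediction algorithm.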

Register on Zoom Here!

Speakers

Prof. Alexander Gammerman, Royal Holloway University of London

Prof. Yao Xie, Georgia Institute of Technology

Prof. Matteo Sesia, University of Southern California, Marshall School of Business

Prof. Lihua Lei, Stanford University

Moderator

Yuting Xu, Merck & Co., Inc.

Agenda

Prof. Alexander Gammerman, Royal Holloway University of London

Presentation Title: "Conformal prediction and testing for change-point detection"

Abstract: A recurrent problem in many domains is the accurate and rapid detection of a change in the distribution of observed values. Instances of this problem, generally referred to as change-point detection, arise in fault detection in vehicle control systems, detection of the onset of an epidemic, and many other settings. The talk reviews an approach to the problem using the conformal method, a modern framework for hedged prediction and testing with proven guarantees under minimal assumptions.

Reference: Algorithmic Learning in a Random World, 2nd ed., Springer, 2022. The relevant material is in Part I (chapter 2) and Part III (chapter 8) of the book.

Prof. Yao Xie, Georgia Institute of Technology

Presentation Title: "Sequential conformal prediction for time series"

Abstract: We develop a general framework for constructing distribution-free prediction intervals for time series. Theoretically, we establish explicit bounds on conditional and marginal coverage gaps of estimated prediction intervals, asymptotically converging to zero under additional assumptions. We obtain similar bounds on the size of set differences between oracle and estimated prediction intervals. Methodologically, we introduce computationally efficient algorithms called EnbPI and SPCI, which avoid data-splitting, do not require exchangeability, and are computationally efficient by avoiding retraining and thus scalable to sequentially producing prediction intervals. We perform extensive simulation and real-data analyses to demonstrate the effectiveness of our methods compared with existing approaches.

Coauthor: Chen Xu

https://arxiv.org/abs/2010.09107

https://arxiv.org/abs/2212.03463

Prof. Matteo Sesia, University of Southern California, Marshall School of Business

Presentation Title: "Conformal inference is (almost) free for neural networks trained with early stopping"

Abstract: Early stopping based on hold-out data is a popular regularization technique designed to mitigate overfitting and increase the predictive accuracy of neural networks. Models trained with early stopping often provide relatively accurate predictions, but they generally still lack precise statistical guarantees unless they are further calibrated using independent hold-out data. This paper addresses the above limitation with conformalized early stopping: a novel method that combines early stopping with conformal inference while efficiently recycling the same calibration data. This leads to models that are both accurate and able to provide exact predictive inferences without multiple data splits nor overly conservative adjustments. Practical implementations are developed for different learning tasks -- outlier detection, multi-class classification, regression -- and their competitive performance is demonstrated on real data.

Co-authors: Ziyi Liang and Yanfei Zhou (University of Southern California)

Reference: https://arxiv.org/abs/2301.11556

Prof. Lihua Lei, Stanford University

Presentation Title: "Conformal Risk Control"

Abstract: While improving prediction accuracy has been the focus of machine learning in recent years, this alone does not suffice for reliable decision-making. Deploying learning systems in consequential settings also requires calibrating and communicating the uncertainty of predictions. Conformal prediction can be viewed as a framework to control the "miscoverage" risk for prediction sets. For decision making, the "miscoverage" risk may be brittle and point predictions may be preferred to prediction sets. In a line of work, we extend conformal inference to control other notions of risks for any type of predictions. As with conformal prediction, our approaches are able to wrap around any black-box machine learning algorithms and provide finite-sample risk control either in expectation or with high probability. We use the frameworks to provide new calibration methods for several core machine learning tasks, with detailed worked examples in computer vision and natural language processing.

Relevant papers: paper #1, paper #2, paper #3

About the Speakers

Prof. Alexander Gammerman is a Fellow of the Royal Statistical Society, a Fellow of the Royal Society of Arts, and a Member of the British Computer Society. He has chaired and served on the organising committees of many international conferences and workshops on Machine Learning and Bayesian methods in Europe, Russia, and the United States. He is also a member of the editorial board of the Law, Probability and Risk journal.

Professor Gammerman's current research interest lies in the application of Algorithmic Randomness Theory to machine learning and, in particular, to the development of confidence machines (conformal predictors). Areas in which these techniques have been applied include medical diagnosis, forensic science, genomics, the environment, and finance. Professor Gammerman has published about two hundred research papers and several books on computational learning and probabilistic inference.

Prof. Yao Xie is an Associate Professor, Harold R. and Mary Anne Nash Early Career Professor at Georgia Institute of Technology in the H. Milton Stewart School of Industrial and Systems Engineering and Associate Director of the Machine Learning Center. She received her Ph.D. in Electrical Engineering (minor in Mathematics) from Stanford University and was a Research Scientist at Duke University.

Her research lies at the intersection of statistics, machine learning, and optimization, providing theoretical guarantees and developing computationally efficient and statistically powerful methods for problems motivated by real-world applications. She received the National Science Foundation (NSF) CAREER Award in 2017, was an INFORMS Wagner Prize Finalist in 2021, and received the INFORMS Gaver Early Career Award for Excellence in Operations Research in 2022. She is currently an Associate Editor for IEEE Transactions on Information Theory; IEEE Transactions on Signal Processing; Journal of the American Statistical Association, Theory and Methods; Sequential Analysis: Design Methods and Applications; and INFORMS Journal on Data Science. She served as an Area Chair of NeurIPS in 2021 and 2022 and of ICML in 2023.

Prof. Matteo Sesia is an Assistant Professor in the Department of Data Sciences and Operations at the USC Marshall School of Business. His research focuses on developing data science methods that combine the power of machine learning algorithms with the reliability of rigorous statistical guarantees. While pursuing this goal, he enjoys dividing his time between theoretical, methodological, computational, and applied work. His doctoral research earned the Jerome H. Friedman Applied Statistics Dissertation Award from the Stanford Statistics Department in 2020.

Prof. Lihua Lei is an Assistant Professor of Economics at the Stanford University Graduate School of Business and an Assistant Professor of Statistics at the School of Humanities and Sciences. He mainly works at the intersection of econometrics and statistics. A large portion of his research focuses on empowering statistical reasoning with machine learning and augmenting machine learning with statistical reasoning.

The central theme is preserving the statistical rigor of confirmatory analysis without compromising the freewheeling nature of exploratory analysis.

About the Moderator

Dr. Yuting Xu is an Associate Principal Scientist in the Biometrics Research Department at Merck & Co. She obtained her B.S. in Physics from Tsinghua University, her M.S.E. in Computer Science, and her Ph.D. in Biostatistics from Johns Hopkins University. Dr. Xu’s research focuses on developing statistical and machine learning methods for analyzing large, complex data in pharmaceutical research and preclinical development.

Her collaborations expand across imaging, neuroscience, cheminformatics, and protein engineering.

NISS/Merck Meetup Series

This virtual webinar series, co-organized by Merck, focuses on topics and issues of interest to biostatisticians, statisticians, and epidemiologists working in or with the pharmaceutical industry. Recordings of previous NISS/Merck Meetup webinars are available here: https://www.youtube.com/playlist?list=PLoRtupvDJTjsFY7Yx3hSzfhW0TNlrlkIO

NISS Affiliate Liaison and Organizer:

Dr. Vladimir Svetnik is a Distinguished Scientist in the Biometrics Research department at Merck & Co. With over 30 years of experience working as a statistician and manager of statistics departments in the medical diagnostics and pharmaceutical industry, Vladimir has established himself as one of the leaders in the field. He and his colleagues are known for pioneering the applications of machine learning to drug discovery, including cheminformatics, electrophysiology, medical imaging, sleep research, and others.

Vladimir's contributions have expanded the Biometrics Research department, turning it into a highly productive data science organization working across all phases of drug development. Vladimir has published over 100 research papers and holds over 20 patents. He earned his M.S. in Electrical Engineering and Ph.D. in Applied Mathematics from Peter the Great St. Petersburg Polytechnic University in the former Soviet Union.

Event Type

- NISS Hosted

- NISS Sponsored